As educators, we are always asking ourselves: are the resources we make available to our students moving the needle? What is the right mix of direct instruction, one-on-one time, and engaging software? I ask myself these questions every day. We are charged with giving our students the best possible opportunities and experiences for success throughout their schooling years and beyond.

During my 9 years of teaching middle school math, trends have surfaced that sparked my interest in digging deeper into the use and efficacy of digital resources as an intervention in my math classes. What started as part of my program to gain administrator certification has led to a two-year research study that sought to prove the efficacy of my work in the classroom and the digital resources we use. In order to obtain objective results, I designed and implemented a way to collect, analyze, and interpret data that compared students who used a digital intervention with those who did not.

When performing research, I gave my class of 8th grade algebra readiness students at Black Mountain Middle School, in San Diego, the opportunity for improvement. Many of these students scored between two and three grade levels below grade-level average on their NWEA MAPs test, creating the need for additional intervention and support to prepare them for success in high school math. As you will see in my research below, pairing a digital supplement with direct teacher instruction effectively increased test scores, raised achievement levels and improved cognitive ability.

Study: Exploring the Effectiveness of Using a Computer-Based Math Intervention Program

Introduction:

Eighth grade students who are below grade level (MAPs scores of 231 and below) are not ready for the current 8th grade course of Algebra. Due to their demonstrated deficits in math knowledge and skills, these students are placed in an introductory course called Algebra Readiness. Many other schools would offer an intervention class for this group of at-risk students, targeting specific critical math skills. However, due to limited funding, there is currently no intervention class offered at our school. Could a computer-based program, such as Learning Upgrade Pre-Algebra, prove to be an effective means to fill the gaps of knowledge and accelerate the growth and math fluency in these specific intervention students?

The research question this study sought to answer is whether a computer-based learning tool (in this study, the digital curriculum provided by Learning Upgrade) could improve student performance on school-wide interim assessments. In recent years, students in Algebra Readiness have not reached optimal growth on Measures of Academic Progress (MAPs). MAPs scores have been shown to directly correlate to student math knowledge and ability and indicate how a student will do in a specific math class. It is essential for these students to reduce or eliminate the gaps in their math knowledge and abilities so they can successfully complete Algebra in high school. Significant growth in MAPs (exceeding yearly expected growth) will show that a student is advancing and ready for more rigorous courses. Variables were controlled as much as possible between the groups studied.

Last year’s study showed that a cost-effective, computer-based augmentation program such as Learning Upgrade can deliver accelerated growth in students who do not have access to a dedicated intervention class. This year’s study asked the following two questions:

1. Do increased student accountability and refined management of a computer-based augmentation program lead to greater math growth?

2. Do students using a computer-based augmentation program with high accountability make greater math gains than students in a traditional program?

Methods:

The original study was conducted at Black Mountain Middle School in Poway Unified School District, San Diego, California during SY 2013-14, with 63 total 8th grade Algebra Readiness students spread between two sections. The original study compared the 63 students, who used the Learning Upgrade program throughout the 2013-2014 school year, with 36 students from SY 2012-2013 who did not use the program. The year two study was also conducted at Black Mountain Middle School in Poway Unified School District, San Diego, California during SY 2014-15, with 30 8th grade Algebra Readiness students.

This year, the teacher implemented lessons learned from the first study to increase accountability and refine management. This new study consisted of two comparisons. The first compared a class of 30 students, who used the Learning Upgrade program as an augmentation this year, with last year’s 63 students, who also used the Learning Upgrade program. This portion of the study compared the MAPs growth results and Learning Upgrade completion between the two classes. The teacher and curriculum were consistent in both studies.

The second comparison set the class of 30 students, who used the Learning Upgrade program this year, against 21 students in another 8th grade Algebra Readiness class, who did not use the Learning Upgrade program. The course curriculum was the same; however, the teachers were different. Like the year one study, the students were from a very diverse population, with varying abilities and gaps. These students have struggled in math from year to year and are not yet ready for the rigor of the 8th grade Algebra class. The purpose of Algebra Readiness is to increase math ability and fill knowledge gaps so students can succeed in Algebra in 9th grade.

This study used the MAPs test as the primary source to show change and growth. MAPs was given in the Fall (October) as a baseline. It was administered again in Winter (February) and Spring (May).

Like last year, students began using the Pre-Algebra Learning Upgrade course early in the school year. One day a week was dedicated to Learning Upgrade in class throughout the year. Additionally, students were expected to do at least 1 hour (20 min 3x) per week, at home. In class, students received a netbook/headphones and worked independently on their current level of Learning Upgrade, while the teacher would circulate and offer help as needed. The teacher would also put up the Student Monitor screen in class so students could see their current levels.

Based on lessons learned from last year, students this year were held accountable for completing at least two Learning Upgrade levels per week. Each level should take 15-20 minutes to complete, and levels can be repeated for more practice and an improved completion score. Students received a grade based on whether they met the expected level of completion for each week. Each Monday, a progress sheet went home for parent signature, showing the student’s current level and expected level to date. The 60-level program was paced so that all students completing the required 2 levels per week could comfortably finish by May.
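The pacing arithmetic behind the weekly progress sheet can be sketched in a few lines of Python. This is only an illustration: the 60 levels and the 2-levels-per-week pace come from the study, but the function names and the simple "2 times the week number" rule are assumptions, not the actual grading procedure.

```python
import math

TOTAL_LEVELS = 60     # from the study: the program has 60 levels
LEVELS_PER_WEEK = 2   # from the study: required weekly pace


def expected_level(week: int) -> int:
    """Level a student should have completed by the end of `week`.

    Assumes week 1 is the first week of use and that pacing is a flat
    2 levels per week, capped at the end of the program.
    """
    if week < 0:
        raise ValueError("week must be non-negative")
    return min(TOTAL_LEVELS, LEVELS_PER_WEEK * week)


def met_weekly_expectation(current_level: int, week: int) -> bool:
    """True if the student's current level is at or past the expected level."""
    return current_level >= expected_level(week)


# Weeks of steady work needed to finish the whole program at this pace.
weeks_needed = math.ceil(TOTAL_LEVELS / LEVELS_PER_WEEK)
```

At this pace the program takes 30 weeks of steady work, which is why it fits comfortably into a school year that ends in May.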

Fall, Winter, and Spring MAPs results were pulled from NWEA (Northwest Evaluation Association), through our district Student Reports Center. Learning Upgrade completion status was pulled through the Learning Upgrade program teacher interface. A comparison was made with the Fall, Winter, and Spring MAPs scores between the different 8th grade Algebra Readiness students in this year’s study. Additionally, how far a student worked through the Learning Upgrade program during the time of the study was analyzed.

Results:

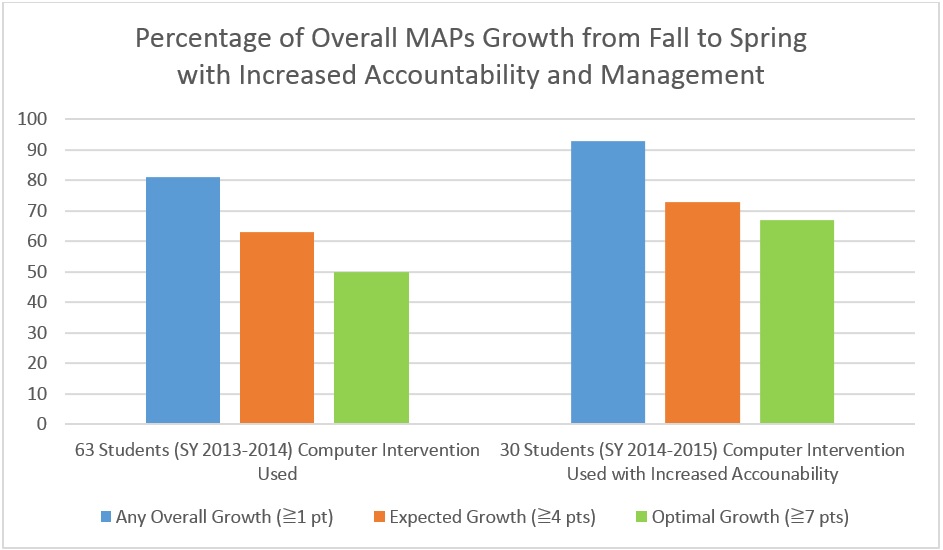

The primary focus of this year’s study compared student MAPs growth of two groups of participants who had used the Learning Upgrade for an entire year to determine if this year’s increased accountability and refined management yielded greater MAPs growth. Over the course of a school year, expected MAPs growth for a student is 4 points, while optimal MAPs growth is 7 points.
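For readers who want to reproduce the three growth categories from their own score exports, here is a minimal Python sketch. The 4-point and 7-point thresholds come from the study; treating them as inclusive, treating "any overall growth" as a gain of more than zero points, and the function name itself are assumptions made for illustration.

```python
EXPECTED_GROWTH = 4  # points per school year, from the study
OPTIMAL_GROWTH = 7   # points per school year, from the study


def category_rates(score_pairs):
    """Share of students in each growth category.

    `score_pairs` is a list of (fall_score, spring_score) tuples.
    Assumes thresholds are inclusive and "any growth" means a gain > 0.
    """
    growths = [spring - fall for fall, spring in score_pairs]
    n = len(growths)
    if n == 0:
        raise ValueError("need at least one student")
    return {
        "any": sum(g > 0 for g in growths) / n,
        "expected": sum(g >= EXPECTED_GROWTH for g in growths) / n,
        "optimal": sum(g >= OPTIMAL_GROWTH for g in growths) / n,
    }


# Made-up scores for illustration only; these are not the study's data.
example = category_rates([(220, 228), (225, 229), (230, 231), (218, 217)])
```

Note that the categories nest: every student who reaches optimal growth is also counted under expected growth and any growth.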

This part of the study found the following results:

• After implementing increased weekly accountability and refining the management, based on the lessons learned from the first study, the “any overall growth” and “expected growth” rates both rose by about 10% from the previous year, while the “optimal growth” rate rose by 17%.

• An added benefit was that students and parents were aware of the expectations and student progress, each week, throughout the school year.

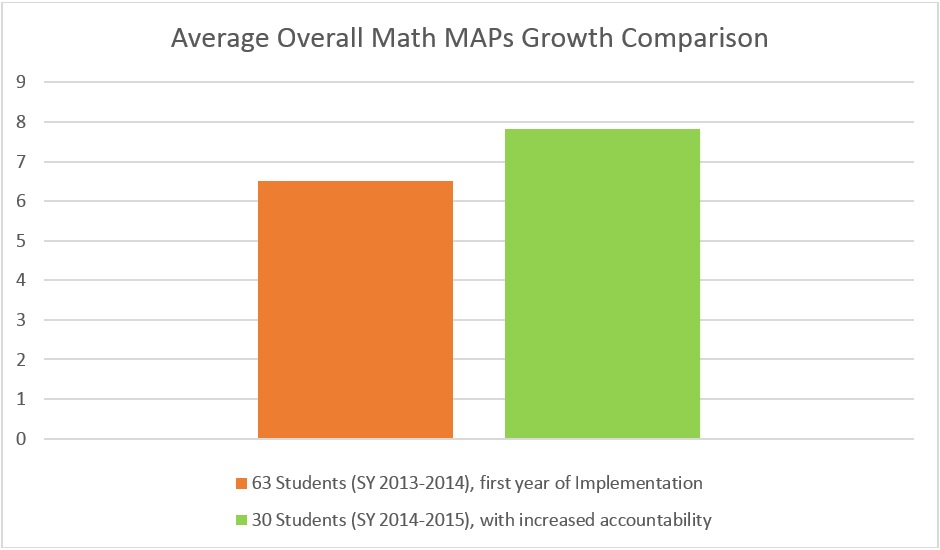

• In average overall math MAPs growth, this year’s class, with increased accountability and explicit expectations, grew 7.83 points on average (optimal growth), whereas last year’s students grew 6.5 points on average (just below optimal growth).

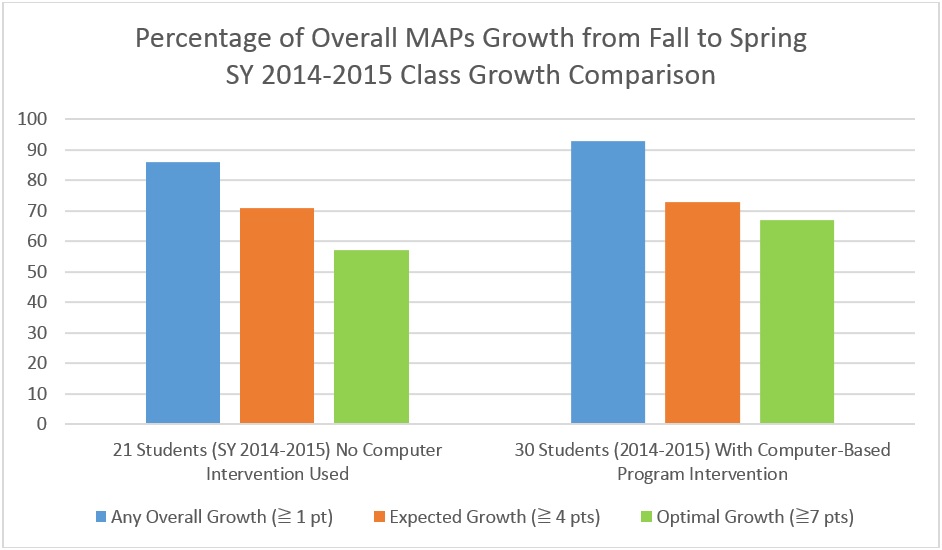

A secondary focus of the study compared two groups of students from SY 2014-2015. One class of 30 Algebra Readiness students, who used the Learning Upgrade program, was compared with one class of 21 Algebra Readiness students, who did not use the Learning Upgrade program.

This part of the study found the following results:

• The students who used the Learning Upgrade program this year, in addition to the standard Algebra Readiness curriculum, showed more growth than the students who did not use it. The class that used it showed an “any overall growth” rate 7% higher, an “expected growth” rate 2% higher, and an “optimal growth” rate 10% higher.

• These increased growth results reinforce that this program can serve as a cost-effective math augmentation program.

A t-test analysis was conducted for each part of this study. The first t-test compared the Fall to Spring MAPs scores growth for the 63 students in SY 2013-2014 with the 30 students in SY 2014-2015. Since these were two sample groups, compared for the same time period, an independent t-test was conducted. The t-test had a combined number of 90 participants. The mean was 1.1667 and the two-tailed P = 0.420053.

The second t-test compared the two SY 2014-2015 classes. This was also an independent t-test. It had a combined number of 51 participants. The mean was 0.119 and the two-tailed P = 0.960326.
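As a rough sketch of what an independent t-test computes, the Python below calculates a pooled-variance (Student's) t statistic for two groups of growth scores using only the standard library. Which variant the study's analysis used is not stated, so the pooled form is an assumption, and the sample numbers are made up. Turning the statistic into a two-tailed P value requires the t distribution, for which a library routine such as `scipy.stats.ttest_ind` is the usual choice.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(group_a, group_b):
    """Return (t, degrees_of_freedom) for an independent two-sample t-test.

    Uses the pooled-variance (equal variances assumed) form.
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    df = n_a + n_b - 2
    # Weighted average of the two sample variances.
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df


# Made-up growth scores for illustration only; not the study's data.
t_value, dof = pooled_t_statistic([8, 6, 9, 7, 10], [5, 7, 6, 4, 8])
```

A larger absolute t value (for a given number of students) corresponds to a smaller P value; by convention, P below 0.05 is treated as statistically significant.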

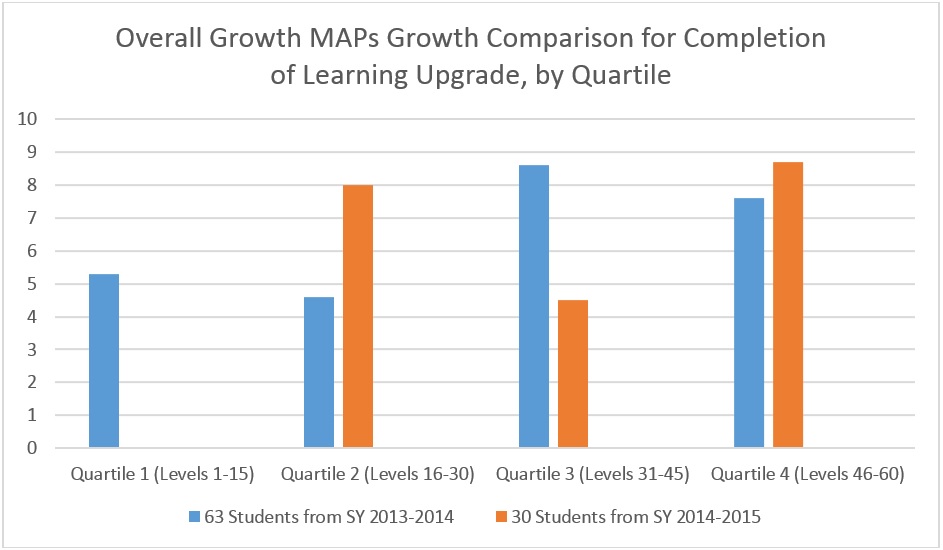

Like the original study last year, a quartile comparison was also conducted. The 60 levels of Learning Upgrade were broken down into four quartiles of 15 levels each. This comparison measured the MAPs growth of students based on the highest level of Learning Upgrade they completed. The results below show the growth differences of each group of students, by quartile.

The above data shows a significant increase in growth for two of the completion quartiles. This year, all students reached at least Quartile 2, and all but three students reached Quartile 3 or 4. The three students who did not reach Quartile 3 finished very close to it; had they reached it, the Quartile 3 completion rate would have increased significantly. It is notable that students who reached the 4th quartile (completion or near completion of the augmentation course) exceeded 8 points of growth on average, which exceeds the optimal MAPs growth mark. Also of note, with increased accountability, 66% of all students finished the entire program, whereas only 56% of all students finished during the first-year study.
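The quartile grouping described above can be sketched in Python as follows. The split of 60 levels into four blocks of 15 comes from the study; the exact boundaries (levels 1-15 as Quartile 1, 16-30 as Quartile 2, and so on), the function names, and the sample records are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean

LEVELS_PER_QUARTILE = 15  # from the study: 60 levels split into 4 quartiles


def completion_quartile(highest_level: int) -> int:
    """Map the highest completed level (1-60) to a quartile (1-4).

    Assumes levels 1-15 are Quartile 1, 16-30 Quartile 2, and so on.
    """
    if not 1 <= highest_level <= 60:
        raise ValueError("highest_level must be between 1 and 60")
    return (highest_level - 1) // LEVELS_PER_QUARTILE + 1


def mean_growth_by_quartile(records):
    """Average MAPs growth per quartile.

    `records` is a list of (highest_level, growth_points) tuples.
    """
    groups = defaultdict(list)
    for level, growth in records:
        groups[completion_quartile(level)].append(growth)
    return {q: mean(values) for q, values in sorted(groups.items())}


# Made-up records for illustration only; not the study's data.
summary = mean_growth_by_quartile([(60, 9), (50, 8), (40, 5), (20, 3)])
```

Grouping by highest completed level, rather than by time spent, matches the way the comparison is described: growth is reported per completion quartile.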

Conclusion:

The year one study addressed the question of whether a computer-based program, such as Learning Upgrade Pre-Algebra, could be an effective means to fill the gaps of knowledge and accelerate the growth and math fluency in these specific intervention students. This year’s study reinforced that the addition of a computer-based augmentation program, such as Learning Upgrade, did indeed improve student growth, as demonstrated by their MAPs scores. Using the lessons learned from the year one implementation helped structure the intervention and yielded improved student growth over year one.

When looking at the student-to-student data comparison, the data shows increased growth in this year’s classes over last year’s classes. The year two quartile study, like year one, showed increased growth for those that finished at least half of the 60 level program. One can conclude, from the data, that for students who made substantial progress in the course (completion or near completion), optimal growth was the norm.

The primary study’s two-tailed P = 0.420053 is well above the conventional 0.05 threshold, which means the difference in growth between the two years’ groups is not statistically significant and could have arisen by chance. The t-test alone therefore cannot establish that the increased accountability and management procedures caused the greater growth, although this year’s higher completion rate and higher average growth are consistent with that explanation. Similarly, the secondary study’s two-tailed P = 0.960326 means that there is not a significant enough difference between the two groups to determine a systemic cause for the increased growth. It is still worth noting that the students who used the Learning Upgrade program as an augmentation to the math curriculum had more growth overall, in all three categories, than those who did not use it. The smaller number of participants in this secondary study may have affected the statistical outcome.

Reflection:

This next school year brings a new 8th grade course to the state of California and our school district. Beginning with SY 2015-2016, the at-risk students who have been taking Algebra Readiness will be taking 8th grade Common Core Math. It will be even more important to ensure that these students continue to be identified and that their knowledge gaps be filled through an intervention program/class.

Our school is trying to find the best time for a specific school-wide intervention program. In the interim, I would like to see these at-risk students continue to use an augmentation program, such as Learning Upgrade, to improve their math skills, address the gaps in their math knowledge, and thereby, show significant growth on MAPs.

This next year, I plan to have my at-risk students use the grade-level specific Common Core Learning Upgrade course alongside their current math curriculum. As discussed in last year’s study, I would prefer to find additional time in the school day to use this intervention. That will likely be as an elective, during part of lunch, or as an afterschool tutorial commitment.

No matter how the intervention piece looks next year, it is essential to have:

• consistency

• increased accountability, and

• student commitment

This combination will maximize the benefit from a computer-based augmentation program.

Digital learning is the way of the future, but it is not a replacement for genuine student-teacher interaction. With a solid balance of accountability, direct and one-on-one instruction, and digital intervention, we can realize optimal student growth and achieve a double-digit increase in test scores.